Synamedia Iris will be taking the stage at CTV Ad Days Europe 2024, with Guy Southam as our featured speaker! Join us on May 30 at 1:30 PM UK time for a 40-minute session on “The reinvention of operator-powered addressable advertising”.

Key topics to be covered:

- From STB Linear to Fully Convergent TV Addressability: See how TV addressability is evolving from set-top box (STB) linear to fully convergent TV.

- Pay TV Operator Integration of FAST Channel Inventory: Explore how Pay TV operators can integrate FAST (free ad-supported streaming TV) channel inventory.

- Enablement of Broadcaster Linear Addressable in Europe: Discover how linear addressable advertising capabilities are being enabled for broadcasters in the European market.

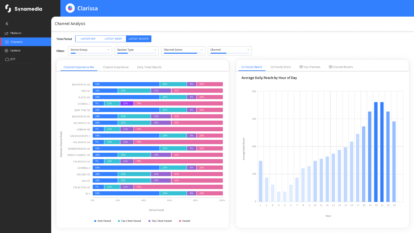

- Audience Targeting – Pre-built and Custom Segmentation: Guy Southam will guide you through the art of audience targeting with both pre-built and custom segmentation strategies.

Don’t miss this chance to be part of the future of connected TV advertising. Fill in the form below if you would like to meet our experts, Guy Southam and Jeremy Bradley, onsite.